Paper: https://arxiv.org/abs/1904.06037

Direct speech-to-speech translation with a sequence-to-sequence model

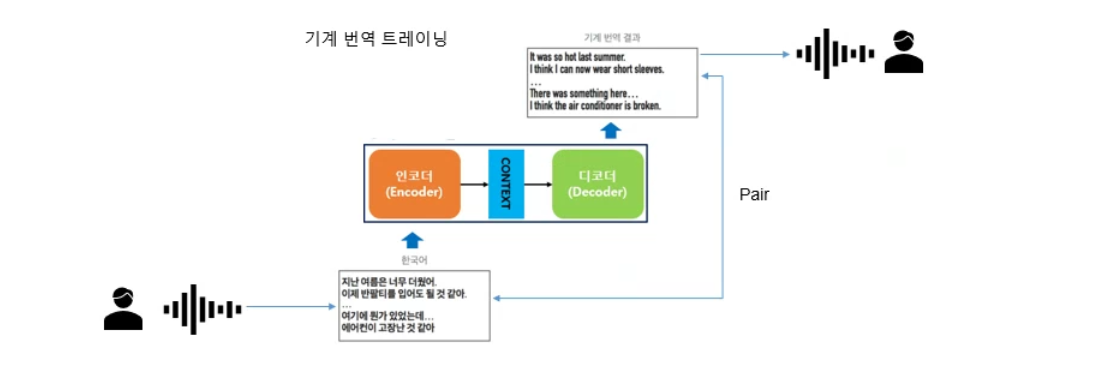


Authors: Ye Jia *, Ron J. Weiss *, Fadi Biadsy, Wolfgang Macherey, Melvin Johnson, Zhifeng Chen, Yonghui Wu.

Abstract: We present an attention-based sequence-to-sequence neural network which can directly translate speech from one language into speech in another language, without relying on an intermediate text representation. The network is trained end-to-end, learning to map speech spectrograms into target spectrograms in another language, corresponding to the translated content (in a different canonical voice). We further demonstrate the ability to synthesize translated speech using the voice of the source speaker. We conduct experiments on two Spanish-to-English speech translation datasets, and find that the proposed model slightly underperforms a baseline cascade of a direct speech-to-text translation model and a text-to-speech synthesis model, demonstrating the feasibility of the approach on this very challenging task.

You can listen to the results here:

https://google-research.github.io/lingvo-lab/translatotron/

